People stopped using AI during L.A.'s wildfires

AI had a trust problem when lives and homes were on the line.

People turned away from AI when lives and homes were on the line during L.A.’s wildfires, according to an AI usage survey I sent this week.

One of my readers who normally uses generative AI for content creation and synthetic search summaries, Respondent #21, had to evacuate his home during L.A.’s wildfires. He stopped using AI as the crisis unfolded:

There was no reason to use it. I was getting info from more reliable sources, like watch duty. I've had problems in the past with AI's accuracy, and honestly, it's not much use beyond as a toy, and some quick Google summaries, but even those are often dodgy.

The couple dozen responses I got to a quickie reader survey about AI use during L.A.’s wildfires aren’t statistically significant, nor necessarily reflective of the breadth of AI uses and opinions.

But a consistent narrative emerged about when people trust — or don’t trust — the AI tech already widely embedded in our information economy.

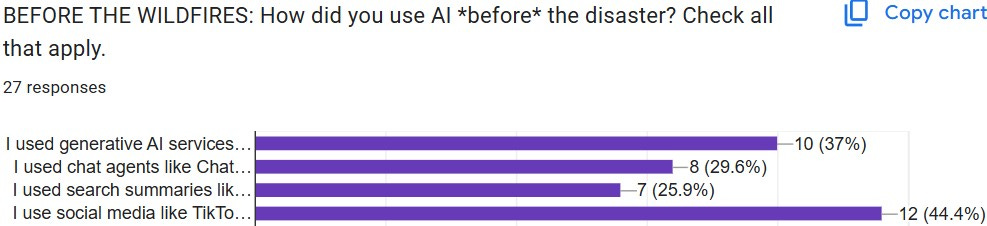

A lot of you use AI already, usually pretty quietly, like (1) ChatGPT for writing help or (2) research, (3) synthetic summaries from Google or Perplexity for searches, or (4) recommendation algorithms on TikTok and X:

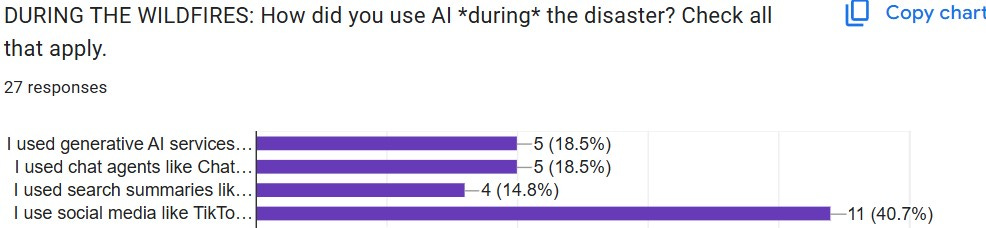

But AI usage dropped off during the L.A. wildfires, except for social media recommendation algorithms.

Respondent #9, an affected Angeleno, was a frequent AI user and continued to be a user through the crisis — but not for anything related to the fires, instead leaning on KTLA (“awesome reporting!”), Watch Duty and some social media. Regarding AI:

It's not at all reliable for news, especially real time, life-threatening news. It can be biased as it trains on biased data like Reddit and social. In simple terms - it can't be trusted. … I've been using AI for a couple of years so I'm probably a little more skeptical of AI's usefulness.

Respondent #14 lives near an evacuation area:

Since I have to fact check it anyhow, why waste time using it. … I’m not opposed to AI, just highly skeptical and not willing to trust it in an emergency situation.

Respondent #10, whose brother’s home was destroyed in the Palisades, uses “AI for many other things, but nothing related to the wildfires,” switching back to legacy media for fire coverage:

I was glued to the television, and to a lesser extent online newspapers, bluesky and the Watch Duty app. … This was very much a local news TV event for me, and I almost never watch local news anymore.

Respondent #5 lives two miles from the Eaton fire and feared evacuation. Not a heavy AI user (apart from synthetic search summaries), they turned even more intentionally toward human-generated information:

Watch Duty was a lifesaver. I was so relieved when I saw the names of actual reporters (sometimes they were retired firefighters) it felt like something I could trust.

As you can probably tell, Watch Duty came up a lot in my survey responses. I’ve written about the influence during this crisis of Watch Duty, which is surprisingly human-forward for a data-powered app. It has reporters and reportedly uses AI only internally, for alerts to staff, prizing accuracy over speed.

Respondent #3, who evacuated from his home in Monrovia and is not a heavy AI user, told me that synthetic AI summaries were too imprecise for the kind of street-by-street information he wanted, and a little too inhuman. “I was actively trying to find things that seemed more thoughtfully produced,” he wrote. “It was a comfort thing.”

I asked him, via email, if it was important to him to know that a human was on the other side of whatever he was looking at. He wrote:

That’s a huge part of it. Yes. It’s partly “the devil you know” also. I haven’t dealt with AI enough to know what it’s capable of and therefore it hasn’t earned my trust yet. With a person, I know their capabilities, more or less, and what to expect and not expect. I know how a person can filter information.

Respondents from outside L.A. who were looking for information about the fires for reasons other than safety distrusted AI because they sought authenticity. Respondent #2, a painter and not a regular AI user, actually “became more suspicious of AI” during the fires and “wondered if the images I saw online were AI generated and I hated that I had to wonder this.” Respondent #15 was primarily interested in “first-hand accounts, not summaries or composite results, so it wouldn't be helpful.”

Algorithm-powered social media served its own chaotic role for this, as Respondent #16 put it:

As far as social media goes, it was mostly as the memes suggest: lots of uninformed finger-pointing, politicized memes, and Schadenfreude, but also interviews of victims, press conferences of various officials, and videos of firefighter pilots scooping and dumping water over heroic music. Generally, I would say TikTok had the greatest variety of the above.

Not all AI use was repudiated. Respondent #13, an Angeleno, brought up a generative AI use that cropped up during the wildfires:

I know of friends (I might have done so once too myself) who asked ChatGPT either what to write to friends who've lost their homes or have to evacuate because we couldn't find the right words to express our condolences and share in their grief, or for it to check if our text strikes the right tone. It's unfathomable.

Respondent #17, affected by the fires, believed AI to still be “helpful for general search (e.g. does this air filter remove asbestos) but I don't know that I trust it to pull the latest info (e.g. what is the Eaton fire doing right now).”

Respondent #27 wrote:

My son was concerned about whether the ash at our house (which fell into his food) could be affecting his health enough to give him headaches or other health problems. I used [OpenAI’s] o1 to do some high level modeling of the contents of the ash, as well as how much a person would have to ingest in order to actually affect their health. This was able to ease my son's concerns for the most part.

This is where I step in as your papa-bear newsletterer and say I am grateful for all these responses for helping me understand how people used AI during a disaster. But this last reply, which arrived late, made me anxious. OpenAI’s Terms of Use say results “may not always be accurate” and strictly prohibit use of outputs for making “medical” decisions. If it were my family member, I’d ask our doctor. To readers, the best I can do is direct you to some experts.

We seek knowledge for its certainty, and crave certainty for its comfort. Beneath the shadow of ash over Los Angeles, danger is present, but our individual risks are beyond precise measure, thus beyond certitude from human or machine alike. The authorities and the AI terms of service tell us to be careful. It’s a human reaction to still wonder: How careful, really?

I've been using (the free version of) Perplexity for some queries that I used to just "Google" and then scan the results to form an answer. I liked how it would do the scanning and answer-forming for me... until...

This past week I asked Perplexity some questions... some related to the fires, some not, and was given some answers that were just straight up WRONG. And when I'd go back to my old method of "googling" to fact-check, it seemed like there was an abundance of information giving the correct answers that Perplexity somehow missed, or misinterpreted. And this seriously happened 3 times in a row. And they weren't all just topics related to breaking / developing news.

I know that Perplexity is just one of many AI tools, but right now I'm suspicious of any answer an AI gives me... especially relating to news

Fascinating. People are ensconced in information silos and will believe all sorts of conspiracies relying on untruths. However, when the chips are down and the wolf is at the door, or the fire threatens their life's endeavors and family, they want facts. Where can one turn to find facts in this era of Post-Truth? Why, to real people on your local news channel. The local newspaper reporters who are on the line and are also real people. And outfits like WatchDuty. My nephew works for CalFire. I respect his knowledge and rationale for crews' responses to dangerous and life threatening situations. Algorithms, AI, the techBros? Not so much. Talk radio hosts? They are great for entertainment. Conflagrations are now entertainment. Let's burn it all down. The algorithms can help by maximizing user "engagement".